Beelink GTR9 Pro AMD Ryzen™ AI Max+ 395: From First Impressions to NIC BSODs and the Road to Stability

Beelink GTR9 Pro AMD Ryzen™ AI Max+ 395 Review and Troubleshooting Journey

When I ordered the Beelink GTR9 Pro AMD Ryzen™ AI Max+ 395, the plan was clear: to use it as a compact AI workstation for running local models and as a testbed for offline inference workloads. On paper, it looked excellent — AMD’s Ryzen AI MAX 395 CPU, LPDDR5 memory with support for up to 128 GB, PCIe 4.0 NVMe storage, and, critically, dual Intel 10 GbE E610-XT2 network adapters[1]. For AI workloads requiring both CPU acceleration and high-throughput networking, this configuration was difficult to ignore.

What follows is a detailed account of my experience — from unboxing and setup, through the first stability issues, to extended troubleshooting with Beelink and Intel, and finally, the configuration changes that initially delivered a stable, quiet, and performant system.

Update 1 (October 2025):

Following the original publication, I continued investigating persistent firmware lock-ups and system crashes affecting the integrated Intel E610-XT2 NICs. Multiple independent users on Reddit have since reproduced the same behaviour on their GTR9 units, confirming this as a systemic fault rather than an isolated hardware failure.

Despite firmware and driver alignment, the NICs remain unstable under sustained mixed GPU + network workloads. After extended correspondence with both Beelink and Intel, I returned the unit for a refund and will continue tracking any future firmware or hardware revisions that address the defect.

Update 2 (October 2025): A possible workaround was found by LukeD_NC on Reddit; details are in the Community Workarounds section of this post.

Update 3 (October 2025): A possible workaround for some Linux users was also found on Reddit (credit to LukeD_NC); details are in the Community Workarounds section of this post.

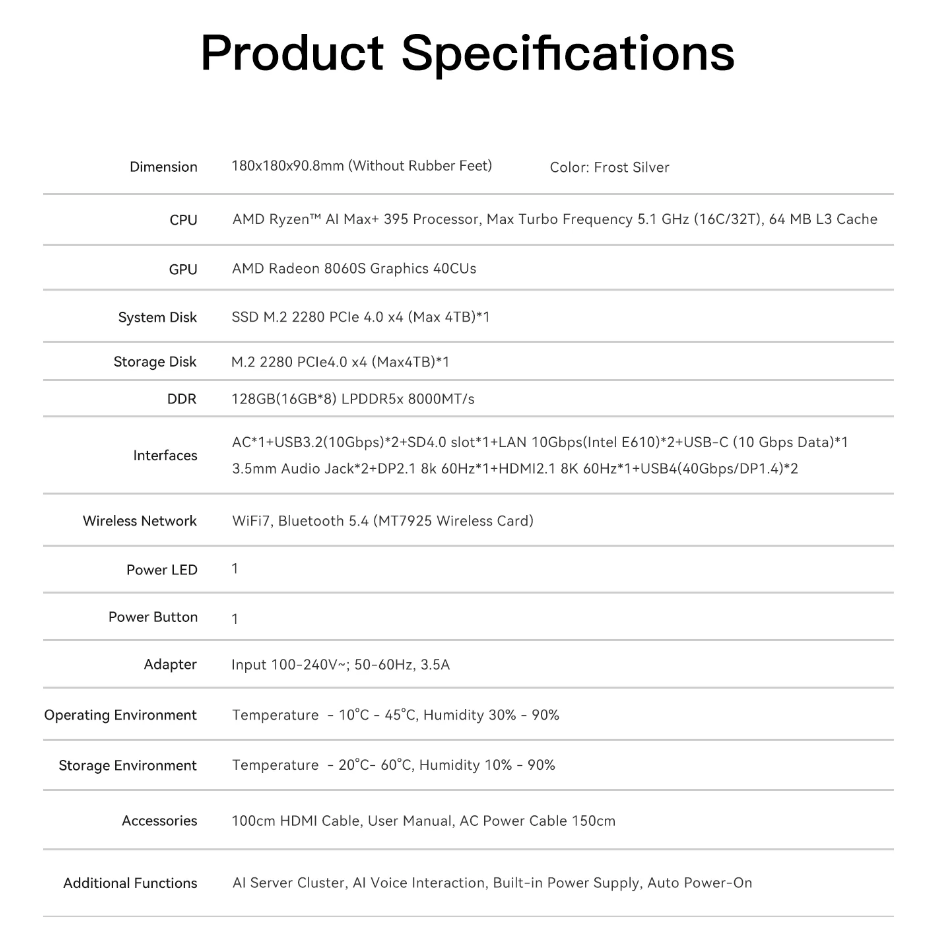

Device Specifications

To establish context, the key specifications of the AI 395 as shipped are summarised below:

- CPU: AMD Ryzen AI MAX 395, 16 cores, integrated NPU for AI acceleration.

- Memory: 128 GB LPDDR5 8000 MT/s RAM.

- Storage: Dual M.2 2280 NVMe slots, PCIe 4.0.

- Networking: Dual Intel E610-XT2 10 GbE NICs, plus Wi-Fi 7.

- Graphics: AMD Radeon 8060S (40 CUs).

- Ports: HDMI 2.1, USB4, USB 3.2, USB-C, SD 4.0 slot, 3.5 mm audio.

- Chassis: CNC-machined aluminium, compact SFF footprint.

On paper this makes the system appealing for AI research, home labs, and as a compact edge node. But as always, specifications don’t tell the whole story.

Unboxing and First Impressions

The AI 395 arrived well packed, with the usual accessories: power cable, HDMI cable, and quick-start guide. The finish is impressive — brushed aluminium, a weighty feel, and a design language that draws comparisons with a Mac Mini.

Booting for the first time, the device shipped with Windows 11 preinstalled. Setup was smooth; I connected over Wi-Fi, performed Windows updates, and installed the latest AMD drivers. No issues emerged during this phase. The cooling system was louder than I expected, but overall idle temperatures remained reasonable.

The first workloads tested were local LLMs via LM Studio. Over Wi-Fi the device was stable, delivering the expected throughput. Things changed the moment I switched from Wi-Fi to the 10 GbE NICs.

The First BSODs

Within minutes of sustained network load and GPU usage, the system crashed with a SYSTEM_THREAD_EXCEPTION_NOT_HANDLED (0x7E) in ixw.sys. Full memory dumps confirmed a null pointer dereference inside the Intel ICE driver. After each crash the NIC LED froze green, and the adapters locked up and vanished from Device Manager. Only a full power cycle restored functionality.

This behaviour was reproducible and consistent, making the NICs unusable for any workload.

Here is the summary of the issue:

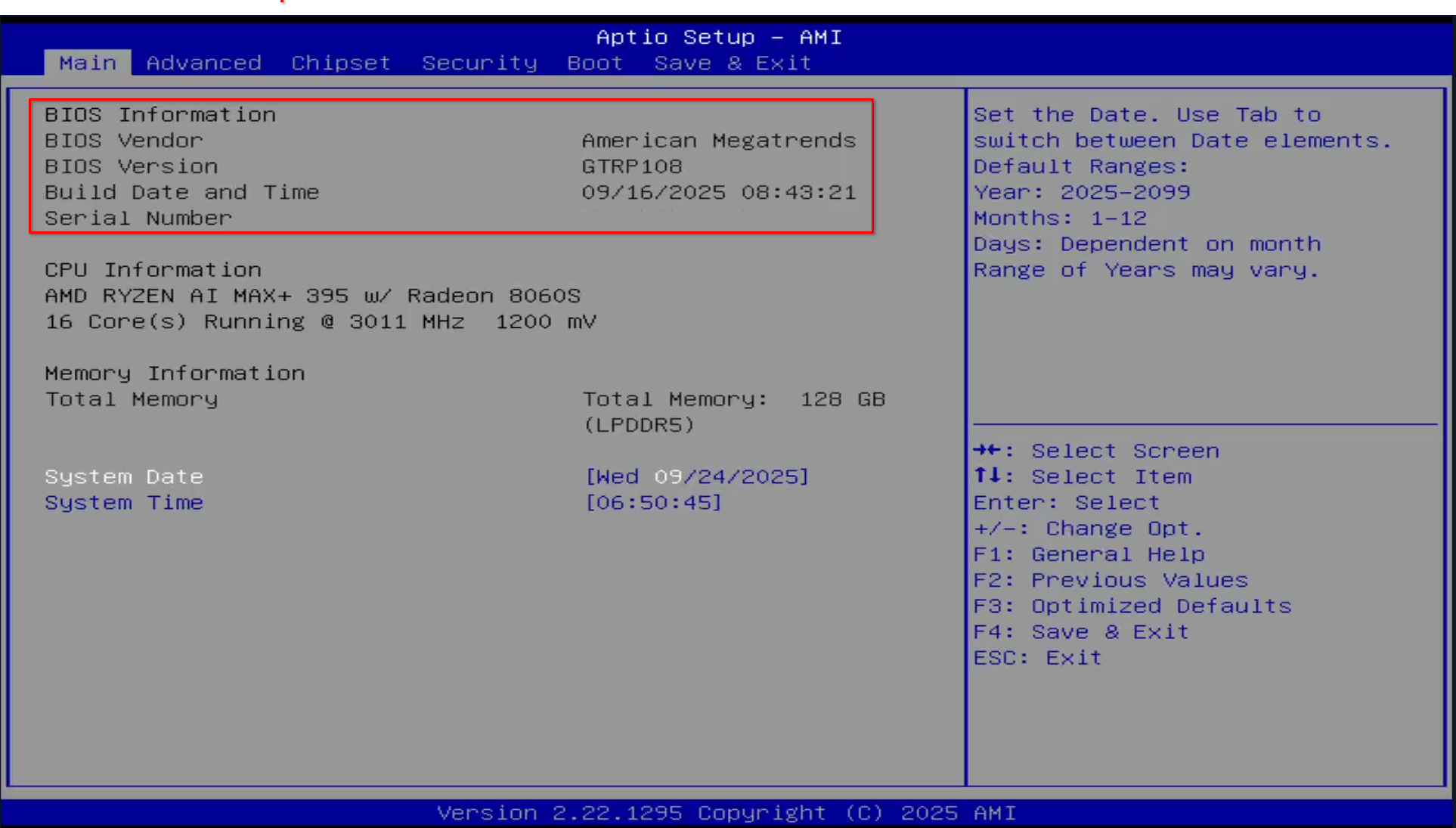

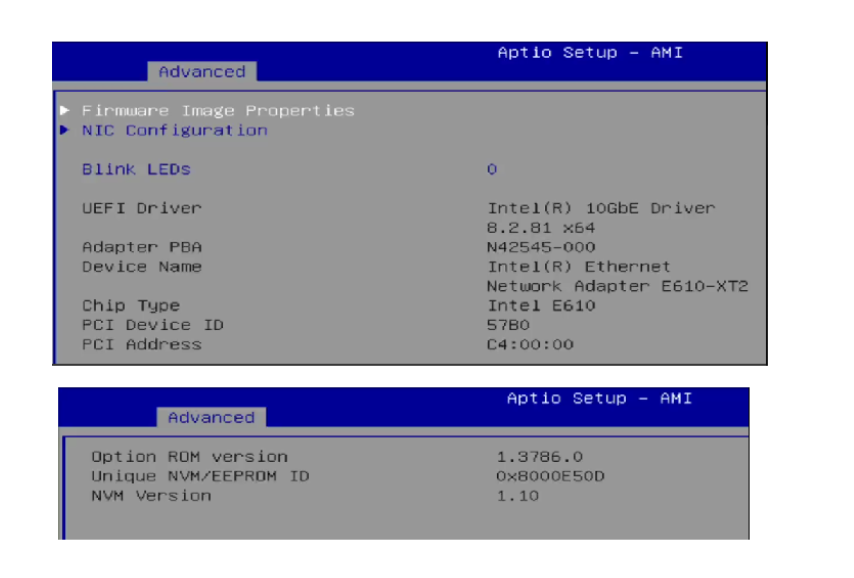

- Beelink AI 395 with an Intel Ethernet Network Adapter E610-XT2 (PCI\VEN_8086&DEV_57B0).

- NIC firmware: NVM 1.10, Option ROM 1.3786.0

- Driver: ixw.sys 1.2.43.0 from Intel Ethernet Pack 30.0.1

- OS: Windows 11 (build 26100)

Problem: After a couple of minutes under load the system hits a SYSTEM_THREAD_EXCEPTION_NOT_HANDLED (0x7E) in ixw.sys. When it crashes the NIC LED stays solid green, Windows no longer sees the adapter, and only a full power cycle brings it back.

BugCheck Review:

Crash Summary

System: Windows 11 build 26100 x64

BIOS Revision: 5.36.0.0

Adapter: Intel® E610-XT2 (ICE driver family)

Firmware: NVM 1.10, Option ROM 1.3786.0

Driver: ixw.sys (ICE), build timestamp 27 Dec 2024, from Intel Ethernet Adapter Complete Driver Pack 30.0.1

Hyper-V: Present/enabled

Bugcheck

Stop code: SYSTEM_THREAD_EXCEPTION_NOT_HANDLED (0x1000007E)

Exception: 0xC0000005 (Access violation – invalid memory reference)

Faulting IP: ixw.sys+0x44B9E → attempted to read from 0x8 (NULL dereference)

Process: System thread (ExecutionContext DispatcherThread)

Stack trace (trimmed):

```
ixw+0x44b9e
nt!ExFreePoolWithTag
ExecutionContext!UnregisterNotificationTaskCallback
ExecutionContext!HandleKWaitTask
ExecutionContext!DispatcherThread
nt!PspSystemThreadStartup
nt!KiStartSystemThread
```

The crash originates inside the Intel ICE driver (ixw.sys), during a callback teardown/unregister path. A NULL (RCX=0) or already-freed pointer is dereferenced, which is consistent with a use-after-free or double-unregister condition. This is a known class of issues when modern ICE drivers (≥1.11.x) are used with outdated NVM firmware (<3.0) on E610/E810 adapters. The NIC’s very early firmware baseline (NVM 1.10) does not align with current driver expectations, leading to BSODs under Windows.

Contacting Beelink Support

I raised a support case with Beelink. My first messages included the system serial number, reproduction steps, and analysis of the dumps. Their early replies asked for photos of the device and BIOS screens, then acknowledged the NIC issue:

“Currently, the network card issue of the GTR9 395 persists, and its root cause lies in the Ethernet driver… A complete resolution to this issue will only be possible once a new driver is released.” — Beelink Support, September 2025

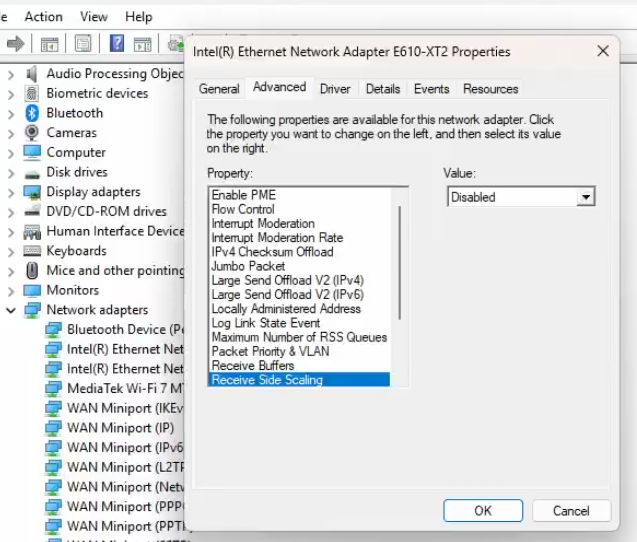

Beelink later provided a BIOS update (v1.08), which was not listed publicly. Applying it introduced minor changes but did not fix the BSODs. I also adjusted the NIC settings to rule out the more common causes, disabling offloads (RSS, LSO, checksum, interrupt moderation) and rolling back drivers, but the issue persisted and every BSOD still showed a null pointer dereference inside ixw.sys.

Driver and Firmware Investigation

At this point I reviewed Intel’s release notes. The drivers bundled in Intel’s Ethernet Pack 30.0.1 required matching NIC firmware. The AI 395 shipped with NVM 1.10, while Intel’s documentation showed that NVM 1.30 or higher was required for the 30.x driver branches. This mismatch was a possible reason for the crashes.

I queried the firmware with Intel’s tool (nvmupdate64e.exe -i), but it did not return any firmware details, which made me think the firmware might be OEM-locked, so I confirmed the firmware version in the BIOS instead. The tool behaves this way because the Beelink-integrated E610 NICs expose a shared NVM image through a composite PCIe function, which the Intel NVM tool interprets as a single logical adapter.

The catch: the NICs were onboard. If the firmware was vendor-locked, applying Intel’s generic NVM update could brick the NICs or even the device itself. I shared this with Beelink, who continued to acknowledge the issue, said it would be fixed by a driver update, and suggested waiting for Intel to release fixes. Their interim workaround was to not use the NICs at all; this was not an option, as I need them for my work.

Intel Forum and Escalation

The next stage was to escalate the issue to Intel directly. I raised the case on Intel’s community forums[2]. Intel engineers confirmed the issue:

- The crash originates in the ICE driver when paired with outdated NVM.

- NVM 1.10 is too old for the 30.x drivers.

- Updating to NVM 1.30 was the next option to move forward, but since the adapters were OEM, the firmware had to come from Beelink.

I relayed this to Beelink and pointed out that waiting for a driver update would leave the firmware unchanged, so the issue would return. After further correspondence, they finally provided the NIC firmware update. With NVM 1.30 installed and drivers updated to the 30.4 pack, the BSODs disappeared.

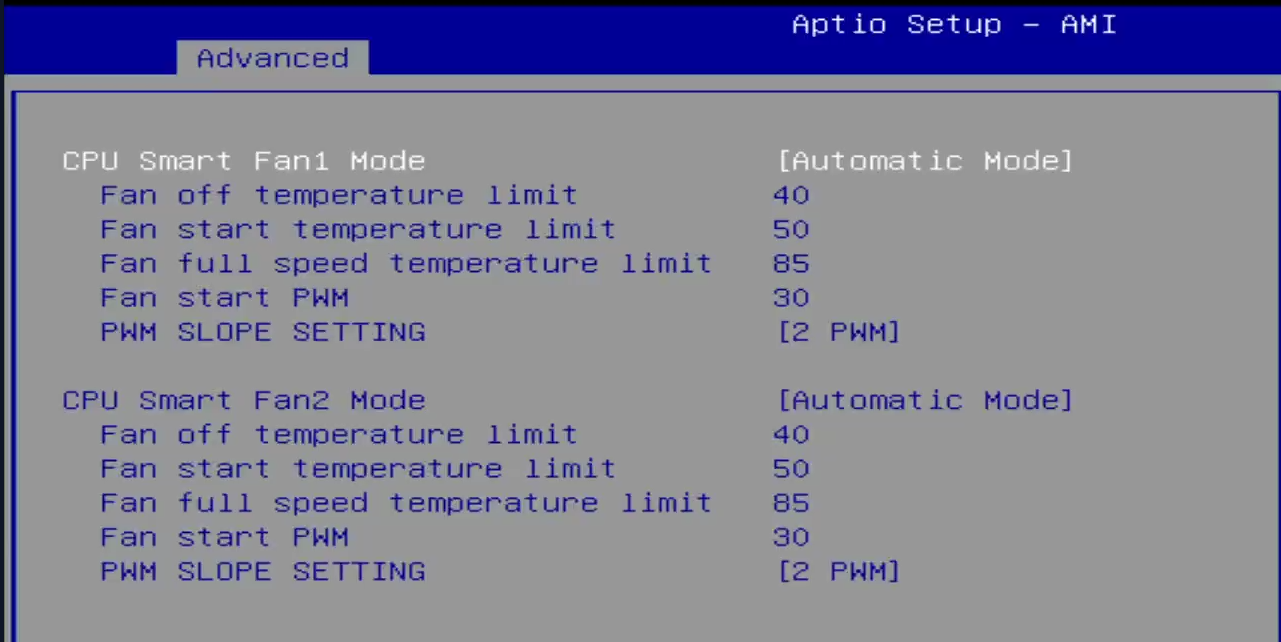

System Tuning

With stability restored, I tuned the device for its intended use:

- BIOS Power Mode: Switched from “balanced” to “performance”, unlocking the full CPU potential. This allows the system to run at its full 140 W power limit.

- Fan Curve: Changed from a constant 2000 RPM to a graduated profile (under “Hardware Monitor” -> “Smart Fan Function”). The system now idles quietly at 40–50 °C; the fans ramp up to 1800 RPM under load and peak at 3800 RPM at high temperatures, keeping the system cool and responsive with less noise.

- NIC Settings: Updated the Windows driver configuration to align with standard networking recommendations (RSS, LSO, interrupt moderation adjusted).

These changes made the system far more usable: stable under network load, quiet at idle, and performant under sustained AI inference.

Geekbench Results

With the system in Performance mode (BIOS v1.08), NIC firmware updated (E610 NVM 1.30) and Intel Driver Pack 30.4, Geekbench completed without instability. The table summarises CPU, OpenGL, and Vulkan results for the representative run on 2025-09-25; ambient temperature was 21 °C and no thermal throttling was observed.

| Test | Score | Tooling / Version |
|---|---|---|
| CPU | Single-core: 2983 / Multi-core: 22483 | Geekbench 6.5.0 |
| OpenGL | 96136 | Geekbench 6.5.0 |
| Vulkan | 84303 | Geekbench 6.5.0 |

Test conditions: Windows 11 build 26100; AMD graphics driver 25.9.1; Intel NIC driver ixw.sys 1.2.43.0 (Pack 30.4); fan profile tuned (idle 40–50 °C, full ramp at 85 °C).

Failing NIC followed me to Linux

Once Windows was fully running, I switched over to Linux (which I will cover in another post) and found that the network card issue followed me. After the install completed, the system initially appeared stable, with no network connectivity or performance problems. After a period of time, however, the NIC began dropping connections. I confirmed the same fault as in Windows: the NIC lights went solid and would not reset. I repeated the troubleshooting steps from Windows and checked that the firmware and driver matched. Since the firmware had already been corrected during the Windows work, the driver was the likely culprit.

Intel NIC Driver Checks and Updates on Linux

When working with Intel NICs on Linux, particularly the E610/E810 series, it is important to verify both the driver and the firmware (NVM) currently in use. A mismatch or outdated module can result in missing features, poor performance, or even interface instability.

Note: Although Intel’s E610 series belongs to the “ICE” family in Windows packaging, its Linux support is implemented under the ixgbe driver (not ice). This is expected behaviour — the E610 shares lineage with the X550/X540 rather than the E810 “ICE” stack.

Step 1 — Check the active driver

Use ethtool to identify which kernel driver is bound to the interface and its version:

sudo ethtool -i enp196s0f1

Example output:

```
driver: ixgbe
version: 6.14.0-1012-oem
firmware-version: 0.00 0x0 0.0.0
expansion-rom-version:
bus-info: 0000:c4:00.1
supports-statistics: yes
supports-test: yes
supports-eeprom-access: yes
supports-register-dump: yes
supports-priv-flags: yes
```

Key fields:

- driver → the kernel module in use (ixgbe in this case).

- version → the driver version (shows whether you are using the in-kernel driver or an out-of-tree update).

- firmware-version → the NIC’s NVM firmware level.

Note that the firmware is reported as 0.00. This points to a driver issue, which is why the driver needs to be updated.
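The Step 1 check can be scripted. Below is a minimal POSIX sh sketch, not an Intel tool: the function names ethtool_field and fw_unreported are hypothetical, and treating a firmware-version that starts with 0.00 as "not reported" is this post's own heuristic rather than a documented rule.

```shell
#!/bin/sh
# Hypothetical helpers that parse `ethtool -i <iface>` output on stdin.
# Heuristic (from this post, not from Intel): a firmware-version starting
# with "0.00" means the loaded driver cannot read the NVM version.

# Print the value of one "key: value" field, e.g. driver or firmware-version.
ethtool_field() {
    sed -n "s/^$1: *//p"
}

# Succeeds (and warns) if the firmware field looks unpopulated.
fw_unreported() {
    fw=$(ethtool_field firmware-version)
    case "$fw" in
        0.00*|"")
            echo "WARN: firmware-version not reported ($fw); driver may be too old"
            return 0 ;;
        *)
            echo "OK: firmware-version $fw"
            return 1 ;;
    esac
}
```

Example: sudo ethtool -i enp196s0f1 | fw_unreported (enp196s0f1 is this system's interface name; substitute your own).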

Step 2 — Confirm which module is loaded

To ensure you are not still running the OEM-shipped module, check which .ko is backing the driver:

modinfo ixgbe | grep -E 'filename|version'

A correct out-of-tree installation should show something like:

```
filename: /lib/modules/6.14.0-1012-oem/updates/drivers/net/ethernet/intel/ixgbe/ixgbe.ko
version: 6.1.4
```

This confirms which driver version is running. The next step was to compare it against Intel’s release of the driver at https://github.com/intel/ethernet-linux-ixgbe (ixgbe-6.2.5).

Since the versions did not match, the next step was to update the driver using the steps outlined in the GitHub repository.
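The comparison above can be sketched as a small shell check, assuming GNU sort -V for version ordering. The function names are hypothetical, and 6.2.5 is simply the release referenced in this post, so raise the target as Intel publishes newer ones. In-kernel builds of ixgbe typically carry no version: field in modinfo output, which the sketch reports separately.

```shell
#!/bin/sh
# Hypothetical helpers; feed them `modinfo ixgbe` output on stdin.
# Requires a sort(1) that supports -V (GNU coreutils).

# Print the "version:" field (exact match, so srcversion/vermagic are skipped).
modinfo_version() {
    awk '$1 == "version:" { print $2; exit }'
}

# Succeeds if version $1 is strictly older than version $2.
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Compare the loaded module against a target release (default 6.2.5).
check_ixgbe() {
    target=${1:-6.2.5}
    have=$(modinfo_version)
    if [ -z "$have" ]; then
        echo "no version: field (likely the in-kernel driver)"
        return 1
    elif version_lt "$have" "$target"; then
        echo "update needed: $have < $target"
    else
        echo "up to date: $have"
    fi
}
```

Example: modinfo ixgbe | check_ixgbe 6.2.5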

Step 3 — Addressing issues

In my case, the kernel’s stock module reported only version: 6.14.0-1012-oem and failed to display the NVM version (0.00 0x0). After compiling and installing the official Intel driver release (ixgbe-6.2.5), the interface correctly reported both the updated driver and the actual firmware version.

If Secure Boot is enabled, the new module must be signed with a key enrolled as a Machine Owner Key (MOK) before it can be loaded at boot; otherwise, the kernel will refuse to load it.
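A sketch for checking, before rebooting, whether the new module still needs signing. It only parses the output of mokutil --sb-state and modinfo (signed modules carry a signer: field); the helper names are hypothetical. The signing commands in the comments are the commonly used OpenSSL, mokutil and kernel sign-file steps with placeholder key names and paths, and should be checked against your distribution's documentation before use.

```shell
#!/bin/sh
# Hypothetical helpers for the Secure Boot / MOK check.
#
# Typical one-time key creation and enrolment (placeholders, run as root):
#   openssl req -new -x509 -newkey rsa:2048 -nodes -days 36500 \
#       -subj "/CN=Local module signing/" -outform DER \
#       -keyout MOK.priv -out MOK.der
#   mokutil --import MOK.der   # confirm in MokManager on the next boot
# Signing after each driver build (path assumed from the modinfo output above):
#   /usr/src/linux-headers-$(uname -r)/scripts/sign-file sha256 MOK.priv MOK.der \
#       /lib/modules/$(uname -r)/updates/drivers/net/ethernet/intel/ixgbe/ixgbe.ko

# Succeeds if `mokutil --sb-state` output on stdin reports Secure Boot on.
sb_enabled() {
    grep -q '^SecureBoot enabled'
}

# Succeeds if `modinfo <module>` output on stdin includes a signer field.
module_signed() {
    grep -q '^signer:'
}
```

Example: if mokutil --sb-state | sb_enabled && ! modinfo ixgbe | module_signed; then echo "sign ixgbe before rebooting"; fi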

With the driver updated, the system crashed as soon as I placed it under GPU load. On inspection, the NICs had entered a firmware recovery state.

Firmware Lock-Up Behaviour on Linux

While testing under Linux (Mint 22.2, kernel 6.14.0-1012-oem), I confirmed that the Intel E610-XT2 NICs can enter a firmware recovery mode identical to what was previously observed in Windows. Once triggered, both network interfaces disappear from PCI enumeration, the LEDs remain solid, and only a complete AC power cycle restores functionality.

The lock-up occurs predictably under GPU-intensive or mixed compute-I/O workloads, such as local LLM inference or gaming benchmarks. Normal workloads such as web browsing, and even iperf3 network stress tests, do not trigger the fault, which suggests a correlation between PCIe concurrency, thermal load, and firmware responsiveness.

Example system log sequence:

```
ixgbe 0000:c5:00.0: Adapter removed
ixgbe 0000:c5:00.0: Firmware recovery mode detected. Limiting functionality.
ixgbe 0000:c5:00.1: Adapter removed
ixgbe 0000:c5:00.1 enp197s0f1np1: NETDEV WATCHDOG: transmit queue timed out
ixgbe 0000:c5:00.1 enp197s0f1np1: Fake Tx hang detected with timeout of 5 seconds
```

After this point, both ports are non-functional, and no reset (FLR, driver reload, or power state transition) recovers them. The kernel continues operating normally, but the NIC firmware remains latched in recovery mode.

| Observation | Details |
|---|---|
| Driver Module | ixgbe 6.2.5 (Intel out-of-tree release) |
| Firmware (NVM) | 1.30 / 1.3863.0 |
| Kernel | 6.14.0-1012-oem |
| Trigger | GPU load or mixed I/O stress |
| Failure Mode | Both NICs enter firmware recovery; LEDs solid; PCIe link lost |
| Recovery | Only full AC power removal restores functionality |

This demonstrates that the firmware lock-up condition is cross-platform, reproducible under both Windows and Linux, and not dependent on driver stack differences. The kernel logs confirm that the controller firmware internally transitions into a safe recovery personality, halting DMA operations and removing itself from the PCI bus.
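To check whether a given boot hit this condition, the log sequence above can be summarised per PCI function. This is a hypothetical awk-based filter keyed on the exact message strings in the excerpt; other driver or kernel versions may word them differently, so an empty result is inconclusive rather than proof of health.

```shell
#!/bin/sh
# Hypothetical filter: read kernel log text on stdin and print one
# "<pci-address> <event>" line per distinct fault seen on an ixgbe function.
scan_ixgbe_log() {
    awk '
        !/ixgbe/ { next }
        # Extract a PCI address such as 0000:c5:00.0 (no {n} intervals, for mawk).
        match($0, /[0-9a-f][0-9a-f][0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]\.[0-7]/) {
            dev = substr($0, RSTART, RLENGTH)
            if ($0 ~ /Firmware recovery mode detected/)      print dev, "recovery-mode"
            if ($0 ~ /Adapter removed/)                      print dev, "adapter-removed"
            if ($0 ~ /transmit queue/ && $0 ~ /timed out/)   print dev, "tx-timeout"
        }' | LC_ALL=C sort -u
}
```

Example: sudo dmesg | scan_ixgbe_log, or journalctl -k -b -1 | scan_ixgbe_log to inspect the previous boot after the power cycle (this needs a persistent journal).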

At present, no configuration, offload tuning, or PCIe power-management adjustment fully mitigates the fault. The failure mechanism is consistent with a firmware-resident deadlock or microcontroller watchdog timeout within the E610’s embedded management logic.
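The observation that no host-side reset recovers the adapter can be re-checked on Linux through the standard PCI sysfs attributes (reset, remove and the bus-wide rescan). A sketch under those assumptions: try_recover and pci_present are hypothetical names, the script must run as root against the real /sys, and the SYSFS override exists only so the logic can be exercised on a scratch directory. Writing to reset or remove on a healthy device will briefly take that NIC offline.

```shell
#!/bin/sh
# Hypothetical recovery probe for one PCI function (e.g. 0000:c5:00.0).
# SYSFS defaults to /sys; override it only for testing against a fake tree.
SYSFS=${SYSFS:-/sys}

# Succeeds if the PCI function $1 is currently enumerated.
pci_present() {
    [ -e "$SYSFS/bus/pci/devices/$1" ]
}

try_recover() {
    dev="$SYSFS/bus/pci/devices/$1"
    # The reset attribute only exists if the kernel has a reset method
    # (FLR, PM or bus reset) for this function.
    if [ -w "$dev/reset" ]; then
        echo 1 > "$dev/reset" && echo "$1: reset requested"
    fi
    # Detach the function, then ask the kernel to rescan the whole bus.
    if [ -w "$dev/remove" ]; then
        echo 1 > "$dev/remove" && echo "$1: removed"
    fi
    echo 1 > "$SYSFS/bus/pci/rescan"
    if pci_present "$1"; then
        echo "$1: present after rescan"
    else
        echo "$1: missing after rescan; full AC power cycle needed"
    fi
}
```

Example (nic-recover.sh is a placeholder file name): sudo sh -c '. ./nic-recover.sh; try_recover 0000:c5:00.0'. If the function still reports "missing after rescan", the adapter is in the latched state described above.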

Steps to Reproduce

To reproduce the error, I returned to Windows. Testing under Windows allows for more consistent reproduction by other users, given the visibility of system crashes and driver events. The test environment required a clean Windows installation with all current updates, drivers, and firmware applied.

The following outlines the exact procedure I used to reproduce the NIC lock-up condition. Because earlier synthetic benchmarks did not reliably trigger the fault, I introduced a GPU-intensive workload using the Final Fantasy XIV: Endwalker Benchmark, which consistently reproduced the issue under mixed GPU and network stress.

Goal: Replicate the NIC failure under controlled conditions using the Beelink AI 395 and Intel E610-XT2 adapters.

- Clean Installation
  - Install Windows 11 24H2 (build 26100) from official ISO media.
  - Do not install third-party utilities or tuning software. Leave Defender and HVCI enabled.

- Platform Firmware
  - Flash Beelink BIOS v1.08 (supplied by Beelink Support).
  - Load BIOS defaults and modify only the fan profile settings.

- NIC Firmware (NVM)
  - Run in an elevated PowerShell: .\nvmupdate64e.exe -i (expect a single E610-XT2 entry, because the flash is shared).
  - Apply the vendor-supplied image to reach NVM 1.30, then reboot.

- Driver Installation
  - Install Intel Ethernet Adapter Driver Pack 30.4 (ixw.sys v1.2.43.0).
  - Install AMD GPU Driver 25.9.1.
  - Reboot after both installations.

- Network Configuration
  - Connect one 10 GbE port to an active switch.
  - Disable or ignore Wi-Fi; it is not relevant to the failure condition. However, I did use it to test Internet access during and after lock-ups.

- Workload Trigger
  - Launch the Final Fantasy XIV: Endwalker Benchmark.
  - Use the “High (Desktop)” preset; one complete run is sufficient.

- Expected Failure
  - During or shortly after the benchmark, either Windows crashes with SYSTEM_THREAD_EXCEPTION_NOT_HANDLED (0x7E) in ixw.sys, or the system remains up but both NIC LEDs freeze solid, the adapters vanish from Device Manager, and only full AC power removal restores functionality.
  - When a crash occurs, the minidump confirms a NULL/invalid pointer dereference inside the ICE driver’s teardown path.

This fault reproduces even on a fully updated configuration (Windows 11 24H2 + BIOS v1.08 + E610 NVM 1.30 + Driver Pack 30.4). The benchmark’s mixed GPU/network workload reliably exercises the race condition responsible for the failure.

Benchmark Trigger Rationale

Unlike synthetic stress tests such as iperf3 or network loopback benchmarks, the Final Fantasy XIV: Endwalker Benchmark provided a repeatable, real-world workload that exercised the system’s GPU, CPU, and network subsystems concurrently. During the scene transitions and asset-loading phases, the engine performs large texture and geometry streaming operations while maintaining continuous telemetry updates and network activity.

This combination produces rapid Deferred Procedure Call (DPC) spikes, heavy interrupt moderation activity, and concurrent driver teardown routines within the Intel ICE stack. Under these conditions, firmware-driver desynchronisation in the E610-XT2’s early firmware (NVM 1.10–1.30) exposes a callback lifecycle flaw inside ixw.sys. The benchmark thus served as a deterministic and practical reproducer for the NIC crash, allowing consistent observation of the BSOD and subsequent adapter recovery failure.

Root Cause Analysis — Intel E610-XT2 NIC Behaviour (Windows)

Because the NIC hang occurred under both Windows and Linux, I returned to Windows to work out what was happening and to build a reliable method for reproducing the lock-up.

After extensive testing across multiple firmware, driver, and operating system versions, the failure behaviour of the Intel E610-XT2 network controllers in the Beelink AI 395 can be divided into two distinct fault domains:

- Initial BSOD and driver failure — caused by a mismatch between early firmware (NVM 1.10) and newer ICE driver branches (≥ v1.2.43.0).

- Persistent NIC lock-up — a deeper fault that continues even after firmware and driver alignment, suggesting a design-level or embedded-firmware defect.

1. Resolved Issue — Firmware/Driver Desynchronisation

The first fault manifested as a SYSTEM_THREAD_EXCEPTION_NOT_HANDLED (0x7E) BSOD in ixw.sys.

Analysis of kernel dumps confirmed a null pointer dereference during the ICE driver’s teardown sequence — a use-after-free condition triggered by incompatible callback logic between the driver and early NVM schemas.

Updating to NVM 1.30 aligned the firmware structures with the 30.x driver series and eliminated the BSOD. This resolved the software-level desynchronisation but did not address deeper hardware-stability problems.

2. Unresolved Issue — Firmware-Resident Lock-Up Under Load

Even with matched firmware (NVM 1.30) and drivers (v1.2.43.0), the NICs continue to enter a non-recoverable lock state during sustained mixed workloads such as the Final Fantasy XIV Benchmark or AI inference tasks. Observed behaviour includes:

- Solid link LEDs with no packet activity.

- Loss of PCIe function visibility in Device Manager and lspci.

- Failure to respond to PCIe FLR (Function-Level Reset), requiring complete AC power removal.

This pattern is consistent with a firmware or controller microcode hang, not a host driver fault. Both ports fail simultaneously because they share a single NVM image and management processor, indicating a stall in the internal AdminQ or DMA scheduler.

3. Probable Root Cause Classification

| Layer | Component | Status | Notes |
|---|---|---|---|
| Driver | ixw.sys (ICE 1.2.43.0) | Stable post-NVM 1.30 | BSOD resolved |
| Firmware | NVM 1.30 | Operational but not robust | Persistent lock-ups under load |
| Hardware | E610-XT2 controller | Unconfirmed defect | Fails to recover from internal fault state |
| BIOS Integration | Beelink OEM layer | Limited visibility | No recovery hooks for NIC reset |

The evidence points to firmware or silicon-level deadlock in the embedded control path managing DMA queue teardown and watchdog timers. No host-side patch or configuration adjustment prevents recurrence.

4. Summary

The initial driver–firmware desynchronisation caused the early BSODs and was successfully resolved through firmware alignment.

However, the ongoing lock-up behaviour is a separate and more fundamental defect within the Intel E610-XT2’s embedded management logic.

This failure persists across operating systems and workloads, indicating that the controller firmware cannot recover once it enters an unrecoverable DMA or interrupt deadlock.

Further remediation must therefore come from Intel’s firmware engineering team in coordination with Beelink’s OEM BIOS integration, not through driver or configuration changes at the user level.

Future Work — Firmware and Hardware-Level Remediation

Although updating to NVM 1.30 and Intel’s Ethernet Driver Pack 30.4 eliminated the BSODs triggered by the firmware mismatch, the deeper and more persistent fault — complete NIC lock-up under sustained mixed workloads — remains unresolved. This behaviour suggests a firmware or hardware design flaw within the Intel E610-XT2 controller, rather than a configuration or software-only defect.

1. Persistent Failure Mechanism

Subsequent testing confirmed that the NICs can still enter a non-recoverable state where both ports freeze with solid link LEDs, disappear from PCI enumeration, and require a complete AC power removal to restore functionality. This occurs even after firmware, BIOS, and driver alignment, indicating the following:

- Shared NVM lockup: Both ports share a single flash region; a deadlock or watchdog timeout within the embedded firmware halts both interfaces simultaneously.

- PCIe recovery deficiency: The adapter fails to complete a functional reset through PCIe hot-reset or bus reinitialisation, suggesting incomplete error-handling logic in the firmware microcontroller.

- Thermal or concurrency sensitivity: Lockups occur most predictably during GPU-intensive or high-interrupt workloads (e.g., FF14 benchmark, AI inference, or large data transfers), implying interaction between power, PCIe, and interrupt domains.

The recurrence of this behaviour across both Windows and Linux confirms it as hardware-level or firmware-resident, not an OS driver anomaly.

2. Required Vendor Actions

Future corrective work must occur at the firmware and design level, coordinated between Intel (as controller manufacturer) and Beelink (as system integrator and OEM firmware custodian). Beelink and Intel have acknowledged the issue and are investigating potential remediations at the firmware and driver integration level. The following actions are required:

- Microcode-level review of the E610-XT2 management engine responsible for link state and DMA queue resets. Intel must determine why the controller fails to respond to PCIe function-level reset (FLR) once the fault occurs.

- Revised NVM release that explicitly includes recovery and watchdog routines for deadlock conditions in AdminQ or Tx/Rx descriptor teardown.

- OEM firmware synchronisation: Beelink must validate and re-sign Intel’s updated NVM images for secure flashing on the embedded NICs, ensuring users can safely deploy them without risk of signature lockout.

- Driver-firmware coordination: Intel’s ICE driver must introduce defensive checks for lost AdminQ or DMA responsiveness, enabling soft-reset rather than silent hang.

Until these changes occur, any fix remains temporary; the system is functionally vulnerable to recurrent NIC lockups under sustained mixed compute-I/O workloads.

The persistence of full NIC lock-ups despite driver and firmware alignment indicates that the defect lies within the controller’s embedded logic or reset sequencing, not in host OS interaction. As such, the issue cannot be mitigated through configuration changes or driver workarounds. Resolution will require a revised NVM release and validation at the hardware abstraction layer by Intel’s Ethernet division in cooperation with Beelink’s firmware team.

Community Workarounds (from Reddit)

The Reddit community has been actively testing and discussing potential mitigations for the Intel E610-XT2 NIC lock-up issue affecting Beelink GTR9 Pro units.

User reports confirm that the fault reproduces under both Windows and Linux, even with updated firmware and drivers.

Note: these are community-sourced experiments only — they do not resolve the root cause.

Possible Workaround 1 — Blacklist ixgbe (Does not work)

Prevent the ixgbe module from loading automatically.

- Create the file:

  sudo nano /etc/modprobe.d/ixgbe-blacklist.conf

- Add this line:

  blacklist ixgbe

- Update the initramfs:

  sudo update-initramfs -u -k all

- Reboot the system.

- Verify the driver is not loaded:

  lsmod | grep ixgbe
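For scripted setups, the blacklist steps can be expressed as two small POSIX sh functions. This is a sketch: the function names are hypothetical, CONF_DIR is an override added only so the logic can be tried against a scratch directory, and update-initramfs and the reboot are still run by hand. Note that a modprobe.d blacklist entry only stops alias-based autoloading, and in this testing blacklisting did not stop the hangs because the controller still enumerates on PCIe without a driver.

```shell
#!/bin/sh
# Hypothetical non-interactive version of the blacklist steps.
# CONF_DIR defaults to /etc/modprobe.d; override it only for testing.
CONF_DIR=${CONF_DIR:-/etc/modprobe.d}

# Write the same single-line blacklist file the manual steps create.
write_ixgbe_blacklist() {
    printf 'blacklist ixgbe\n' > "$CONF_DIR/ixgbe-blacklist.conf"
}

# Succeeds if ixgbe appears as a module name in `lsmod` output on stdin.
ixgbe_loaded() {
    awk '$1 == "ixgbe" { found = 1 } END { exit !found }'
}
```

Example: sudo sh -c '. ./blacklist.sh; write_ixgbe_blacklist' (blacklist.sh is a placeholder file name), then after updating the initramfs and rebooting, lsmod | ixgbe_loaded && echo "ixgbe still loaded".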

| Test | Result |
|---|---|
| Expected result | The NIC driver remains unloaded. |
| Observed behaviour | The NICs still enumerate via PCIe and continue to hang under GPU load. |
| Status | Does not work. |

Possible Workaround 2 — Disable PCIe Devices Using udev (Testing)

Force removal of the affected NICs during PCI initialisation. This prevents the firmware from activating but also disables all onboard wired networking.

- Create a new rule:

  sudo nano /etc/udev/rules.d/70-disable-ixgbe.rules

- Add the following lines, replacing 0000:c5:00.0 and 0000:c5:00.1 with your actual PCI device addresses:

  ```
  ACTION=="add", KERNEL=="0000:c5:00.0", SUBSYSTEM=="pci", RUN+="/bin/sh -c 'echo 1 > /sys/bus/pci/devices/0000:c5:00.0/remove'"
  ACTION=="add", KERNEL=="0000:c5:00.1", SUBSYSTEM=="pci", RUN+="/bin/sh -c 'echo 1 > /sys/bus/pci/devices/0000:c5:00.1/remove'"
  ```

- Reboot the system.

- Verify removal; you should not see the Ethernet interfaces listed:

  lspci
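Because the PCI addresses differ between units (c5:00.x here, c4:00.x in the ethtool output earlier), generating the rules can be less error-prone than typing them. A sketch with a hypothetical function name: it checks each argument against the domain:bus:device.function format and prints one rule per address in the same form as the rules above.

```shell
#!/bin/sh
# Hypothetical generator for the udev removal rules shown above.
# Arguments: one or more PCI addresses such as 0000:c5:00.0.
gen_remove_rules() {
    q="'"   # single quote, kept in a variable to keep the printf format readable
    for addr in "$@"; do
        case $addr in
            [0-9a-f][0-9a-f][0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]:[0-9a-f][0-9a-f].[0-7]) ;;
            *) echo "bad PCI address: $addr" >&2; return 1 ;;
        esac
        printf 'ACTION=="add", KERNEL=="%s", SUBSYSTEM=="pci", RUN+="/bin/sh -c %secho 1 > /sys/bus/pci/devices/%s/remove%s"\n' \
            "$addr" "$q" "$addr" "$q"
    done
}
```

Example: gen_remove_rules 0000:c5:00.0 0000:c5:00.1 | sudo tee /etc/udev/rules.d/70-disable-ixgbe.rules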

| Test | Result |
|---|---|
| Expected result | The NICs are unbound from the PCI bus before firmware initialisation. |
| Observed behaviour | System remains stable under GPU load, but wired networking is disabled. |
| Status | Diagnostic only (partial success). |

Community members confirmed that the same failure pattern appears on multiple distributions — including Fedora 43 (kernel 6.17, ixgbe 6.2.5) — showing that the problem is architecture-level rather than OS-specific.

Several users have temporarily resorted to USB or Thunderbolt NICs while awaiting an Intel firmware fix.

Possible Workaround 3 — Disable Advanced Error Reporting (AER) (Testing Only)

A potential workaround for the Intel E610 firmware recovery loops is adding the kernel parameter pci=noaer, which disables PCI Express Advanced Error Reporting (AER). This can prevent false PCIe “surprise removal” or “link error” events that may trigger NIC resets, but it also suppresses legitimate error reporting, so use it only for testing.

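To apply, open the GRUB defaults file:

sudo nano /etc/default/grub

Add pci=noaer to the existing GRUB_CMDLINE_LINUX_DEFAULT line, for example:

GRUB_CMDLINE_LINUX_DEFAULT="quiet splash pci=noaer"

Then regenerate the GRUB configuration and reboot:

sudo update-grub

sudo reboot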

To confirm after reboot:

sudo dmesg | grep -i aer

If no AER messages appear, the setting is active.
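Since the absence of AER messages is only indirect evidence, it can help to check the running kernel command line directly. A hypothetical helper that looks for noaer among the comma-separated pci= options:

```shell
#!/bin/sh
# Hypothetical check: succeeds if the kernel command line in $1 contains
# noaer within any pci= option (e.g. "pci=noaer" or "pci=nommconf,noaer").
has_noaer() {
    for tok in $1; do
        case $tok in
            pci=*)
                opts=${tok#pci=}
                case ",$opts," in
                    *,noaer,*) return 0 ;;
                esac ;;
        esac
    done
    return 1
}
```

Example: has_noaer "$(cat /proc/cmdline)" && echo "pci=noaer is active"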

| Test | Result |
|---|---|
| Expected result | The NICs remain active and no AER triggers appear in the logs. |
| Observed behaviour | System remains stable under GPU load, but wired networking may be active. |
| Status | Failed (same result). |

Possible Workaround 4 — Limit the APU TDP to 35 W (Tested on Windows Only)

Note: Credit to LukeD_NC on Reddit, who worked out this workaround.

A potential Windows-only workaround for the Intel E610 firmware recovery issue is to drop the APU’s TDP to 35 W. Details are in the Reddit post “GTR9 Pro - temporary stability fix for Windows”. The fix uses the ryzenadj tool to lower the TDP to 35 W, reducing the overall load on the power bus; the result appears to be a stable Windows run of the FF14 benchmark.

The tool used in the post does have a Linux version, but I don’t have any reports of it being tested there yet.

To apply:

- Download the tool from the Git repository https://github.com/FlyGoat/RyzenAdj/ and run the following command on Windows as an administrator.

ryzenadj -a 35000 -b 35000 -c 35000
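RyzenAdj takes its limits in milliwatts: -a sets the sustained STAPM limit, -b the fast PPT limit and -c the slow PPT limit, so 35000 corresponds to 35 W. The sketch below is a hypothetical helper that only prints the command for a given wattage, which makes it easier to try other caps; it does not call ryzenadj itself, and as noted above the Linux build has not been tested against this fault.

```shell
#!/bin/sh
# Hypothetical helper: print a ryzenadj command that caps STAPM (-a),
# fast PPT (-b) and slow PPT (-c) at the same wattage.
ryzenadj_cmd() {
    watts=$1
    case $watts in
        ''|*[!0-9]*) echo "usage: ryzenadj_cmd <watts>" >&2; return 1 ;;
    esac
    mw=$((watts * 1000))   # RyzenAdj expects milliwatts
    echo "ryzenadj -a $mw -b $mw -c $mw"
}
```

Example: ryzenadj_cmd 35 prints the same command used in the community fix.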

| Test | Result |
|---|---|
| Expected result | TDP power reduced to 35 W. |
| Observed behaviour | System remains stable under GPU load; the FF14 benchmark completed its run with no drops on the NIC. |
| Status | Success on Windows, untested on Linux. |


Possible Workaround 5 — Disable Onboard 10 GbE Adapters via BIOS and Udev (Tested on Linux – Batocera)

Note: Credit to LukeD_NC on Reddit for this workaround and testing under Batocera Linux.

A potential Linux-only workaround for the Intel E610 NIC instability is to disable the onboard 10 GbE adapters at the firmware level and remove them automatically during system boot. This prevents the kernel from initialising the faulty interfaces that cause lockups when accessed (for example, during an lspci scan).

This method was verified on Batocera Linux (kernel 6.15.11). Later builds such as batocera-x86_64-42-20251006.img.gz already disable the NICs by default, so this procedure only applies to earlier releases or other Linux distributions exhibiting the same crash behaviour.

To apply:

Step 1 — Adjust BIOS Settings

Path:

Advanced → AMD PBS → PCI Express Configurations

- Enable Power Sequence of Specific WWAN = Disabled

- PCIE x4 DT Slot Power Enable = Disabled

- WLAN Power Enable = Disabled

- WWAN Power Enable = Disabled

- GbE Power Enable = Disabled

Path:

Advanced → AMD PBS → Power Saving Configurations

- LOM D3Cold = Disabled

- Keep WLAN Power in S3/S4 State = Disabled

Path:

Advanced → PCI Subsystem Settings

- Re-Size BAR Support = Disabled

- SR-IOV Support = Disabled

- BME DMA Mitigation = Disabled

- Hot-Plug Support = Disabled

Path:

Advanced → Network Stack Configuration

- Network Stack = Disabled

- IPv4 PXE Support = Disabled

- IPv4 HTTP Support = Disabled

- IPv6 PXE Support = Disabled

Path:

Advanced → Demo Board → PCI-E Port

- PCI-E Port Control = Disabled

Step 2 — Create Udev Rules to Remove NICs on Boot

Boot into Batocera (or another Linux distribution) and create a new rule file:

sudo nano /etc/udev/rules.d/70-disable-10gbe.rules

Add the following lines:

ACTION=="add", KERNEL=="0000:c4:00.0", SUBSYSTEM=="pci", RUN+="/bin/sh -c 'echo 1 > /sys/bus/pci/devices/0000:c4:00.0/remove'"

ACTION=="add", KERNEL=="0000:c4:00.1", SUBSYSTEM=="pci", RUN+="/bin/sh -c 'echo 1 > /sys/bus/pci/devices/0000:c4:00.1/remove'"
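The PCI addresses `0000:c4:00.0` and `0000:c4:00.1` in these rules come from the tested unit and may differ on other systems or BIOS versions. Because lspci itself can trigger the crash, the sketch below instead reads the `vendor` and `class` attributes under `/sys/bus/pci/devices` and prints matching rules for Intel (vendor `0x8086`) Ethernet-class (`0x0200xx`) functions. It is an untested assumption that these sysfs reads are safe on an affected unit, and the filter would also match any other Intel wired Ethernet controller, so review the output before saving it. The function names are hypothetical.

```python
from pathlib import Path

INTEL_VENDOR = "0x8086"
ETHERNET_CLASS_PREFIX = "0x0200"  # PCI class 02 (network), subclass 00 (Ethernet)

# Same rule shape as above, with the PCI address substituted in.
RULE_TEMPLATE = (
    'ACTION=="add", KERNEL=="{addr}", SUBSYSTEM=="pci", '
    'RUN+="/bin/sh -c \'echo 1 > /sys/bus/pci/devices/{addr}/remove\'"'
)


def intel_ethernet_functions(sysfs_root: str = "/sys/bus/pci/devices") -> list[str]:
    """Return PCI addresses of Intel Ethernet-class functions, read from
    sysfs attributes rather than by running lspci."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    found = []
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip()
            pci_class = (dev / "class").read_text().strip()
        except OSError:
            continue  # attribute missing or unreadable; skip this function
        if vendor == INTEL_VENDOR and pci_class.startswith(ETHERNET_CLASS_PREFIX):
            found.append(dev.name)
    return found


def udev_rules(addresses: list[str]) -> str:
    """Render one removal rule per PCI address."""
    return "\n".join(RULE_TEMPLATE.format(addr=a) for a in addresses)


if __name__ == "__main__":
    print(udev_rules(intel_ethernet_functions()))
```

After the reboot, running the script again should print nothing, since removed functions no longer appear under `/sys/bus/pci/devices` until the bus is rescanned.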

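Then save the change and reboot (batocera-save-overlay is Batocera-specific and persists the modified overlay; on other distributions the rule file persists as written, so only the reboot is needed):

batocera-save-overlay

reboot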

After reboot, plug in a USB-C Ethernet adapter to restore network connectivity.

| Test | Result |
|---|---|
| Expected result | System boots without initialising onboard Intel E610 adapters. |
| Observed behaviour | System remains stable; lspci no longer triggers a kernel crash. |
| Status | Success on Batocera Linux; not applicable to latest Batocera builds or Windows. |

Conclusion

The Beelink GTR9 Pro (Ryzen AI Max+ 395) demonstrates strong hardware potential but exposes a critical flaw in Intel’s E610-XT2 network controller integration. Out of the box, the system shipped with mismatched driver and firmware baselines (ixw.sys 1.2.43.0 vs NVM 1.10), resulting in immediate BSODs and subsequent firmware lock-ups under network load. Aligning the firmware to NVM 1.30 and the drivers to Intel Ethernet Pack 30.4 resolved the Windows driver crash, yet the firmware-resident lock-up persists across both Windows and Linux when the GPU or PCIe subsystem is placed under sustained load.

The issue is now confirmed to be cross-platform and reproducible even after driver and firmware alignment, indicating a failure mechanism within the controller’s embedded microcode or PCIe recovery path. It cannot be mitigated through configuration changes, offload tuning, or driver substitutions.

Known-Good Configuration (Stable for Moderate Use)

| Component | Version / Detail | Status |
|---|---|---|
| System BIOS | v1.08 (Beelink OEM) | Required for firmware visibility |
| NIC Firmware | Intel E610 NVM 1.30 | Aligned with 30.x driver baseline |
| Windows Driver | ixw.sys 1.2.43.0 (Intel Pack 30.4) | Stable; BSOD resolved |
| Linux Driver | ixgbe-6.2.5 (out-of-tree Intel build) | Stable; firmware lock-up persists |
| Status | Stable for general workloads | Fails under GPU + network stress |
| Workaround | Use Wi-Fi or external USB 2.5/10 GbE adapters | Temporary measure |
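On Linux, the driver and NVM versions in the table above can be checked with `ethtool -i <interface>`, which reports `driver`, `version` and `firmware-version` fields. The sketch below parses that output and compares it with the known-good Linux row. The helper names are hypothetical, the interface name is system-specific, and the exact layout of the E610 `firmware-version` string is an assumption (the check only looks for `1.30` as a separate token), so compare against your real output before trusting the result.

```python
import subprocess


def parse_ethtool_info(text: str) -> dict[str, str]:
    """Parse `ethtool -i` output ("key: value" per line) into a dict.
    Splits on the first colon only, so values like bus-info keep theirs."""
    info = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def matches_known_good(info: dict[str, str],
                       driver: str = "ixgbe",
                       driver_version: str = "6.2.5",
                       nvm: str = "1.30") -> bool:
    """Compare against the known-good Linux row.
    ASSUMPTION: the NVM version appears as its own token in firmware-version."""
    fw_tokens = [t.strip(",") for t in info.get("firmware-version", "").split()]
    return (info.get("driver") == driver
            and info.get("version") == driver_version
            and nvm in fw_tokens)


def query(interface: str) -> dict[str, str]:
    """Run `ethtool -i <interface>` and parse the result."""
    out = subprocess.run(["ethtool", "-i", interface],
                         capture_output=True, text=True, check=True).stdout
    return parse_ethtool_info(out)
```

For example, `matches_known_good(query("enp196s0f0"))`, where `enp196s0f0` is a hypothetical name for the port at `0000:c4:00.0`. This only confirms versions; as the table notes, a matching configuration still fails under combined GPU and network stress.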

Summary

The persistence of the NIC hang after complete driver and firmware alignment confirms that the fault lies within the controller’s embedded management firmware, not within operating-system drivers or configuration. Both ports fail simultaneously because they share a single NVM image and management engine.

Resolution will require a new Intel NVM release containing revised microcode and improved PCIe error-recovery logic, validated and re-signed by Beelink for end-user deployment. Until such a release is available, the GTR9 Pro remains reliable only under light to moderate workloads and should not be relied upon for sustained high-throughput or GPU-intensive network scenarios.

Postscript — October 2025

As of October 2025, this issue has been formally escalated to Intel’s Ethernet Division and Beelink’s firmware team. Both vendors have acknowledged the defect and are investigating a firmware-level remediation. I will update this article once a new NVM package or BIOS release becomes available for public testing.

Following extensive cross-platform testing and direct correspondence with Intel and Beelink, I concluded that the persistent firmware lock-ups affecting the integrated Intel E610-XT2 controllers are not resolvable through driver or configuration changes. Consequently, I returned the unit for a refund.

I will continue monitoring Beelink’s firmware releases and Intel’s NVM updates for this platform. If a stable revision becomes available, I may revisit the GTR9 Pro in a follow-up analysis covering firmware behaviour, PCIe recovery characteristics, and system thermals.

Images of GTR9 are taken directly from the Beelink website.

1. Beelink, GTR9 Pro AMD Ryzen AI MAX 395 Specifications, https://www.bee-link.com/products/beelink-gtr9-pro-amd-ryzen-ai-max-395?variant=47842426290418, accessed September 2025.
2. Intel Community Forums, E610-XT2 BSOD NIC hang: firmware vs driver mismatch, https://community.intel.com/t5/Ethernet-Products/E610-XT2-BSOD-NIC-hang-firmware-vs-driver-mismatch/m-p/1718766, accessed September 2025.